Is ChatGPT reliable? This question is likely of interest to everyone who has ever tried to use this program. This sensational artificial intelligence app impressed everyday Internet users and experts alike. Millions immediately rushed to experience the chatbot’s capabilities, and since then its user base has grown many times over.

Our Custom-Writing.org team has also become remarkably curious about whether ChatGPT can be trusted.

Here, you’ll see the results of our research and experiments, along with analysis and explanations:

- You’ll take a detailed look at the different areas this program can work with.

- You’ll understand where it succeeds and where it performs worse.

- You’ll get some useful advice on how to efficiently use ChatGPT.

🖥️ Is ChatGPT Trustworthy?

The short answer is no; moreover, some research suggests that its performance is getting worse over time. New studies of the GPT language models that power ChatGPT show disappointing results. Experts note that, over time, the chatbot’s responses have come to sound very close to human answers; however, the trustworthiness of the information is questionable. Interestingly, no one, including the developers, can concretely explain why the AI’s performance has deteriorated.

One of the groups that presented their research was a team from Stanford and the University of California, Berkeley. Their experiments showed that the AI gives answers of varying accuracy and fails to cope with specific tasks. For instance, ChatGPT had variable success in solving math problems, handling visual reasoning tasks, answering ethically sensitive questions, and generating code. Experts believe that we’ll soon see the consequences of the deterioration of language models. Researchers from New York University had previously raised similar concerns in the journal JMIR Medical Education. Their study was motivated mainly by the chatbot’s privacy problems and its tendency to make things up and generate false answers.

The deteriorating performance of large language models (LLMs) is becoming troublesome in business, medicine, and math. Business Insider reported that some users had complained about ChatGPT’s performance on the OpenAI developer forum.

💻 Is ChatGPT Reliable?

How reliable is ChatGPT? It is hard to give an absolute answer: it can do an excellent job of analyzing credible sources, but it tends to hallucinate when it lacks data on your chosen research topic. That is why we don’t recommend relying on a chatbot for all of your tasks. After reviewing the studies described above, we decided to test just how accurate and reliable ChatGPT’s answers are. Below, we explain the areas where it succeeds and where it cannot be trusted.

For Essays

Strengths

When it comes to writing papers, ChatGPT performs quite well. The AI can help you create an outline for your paper, give you writing tips, and explain obscure concepts. It can even write essays that you can use for inspiration and learning.

Here are some great ideas on how you can use a chatbot for your paper:

- Choosing a topic. Provide your subject area or keywords, and ChatGPT will offer you a list of ideas for your essay. It can also help you narrow or broaden the topic of your paper.

- Making an outline. If you’ve decided on the topic, you can use the chatbot as an essay outline generator. Ask it to make a rough plan of your paper and structure the sections according to your requirements.

- Editing and proofreading. Once your essay is written, you can ask ChatGPT to proofread it for grammar, punctuation, and coherence. You can also ask it to improve the style and tone of your essay.

As you can see from this example, ChatGPT performs adequately when given a prompt of this nature. It is a good starting point for getting ideas for essays and developing them further.

Weaknesses

Given that ChatGPT is a language model, its success in producing written work may not come as a surprise. Many people praise it for its coherent speech and proper grammar. But even where the model succeeds, the AI can still make obvious mistakes.

This becomes apparent when you look at examples from our experiment:

As most people reading the word “scintillate” would notice, the letter “R” does not appear in it at all. However, ChatGPT seems to think otherwise. In fact, it is so certain of its answer that, when prompted further, it gives a completely nonsensical explanation. This behavior is not limited to spelling errors; ChatGPT also struggles with grammar.

At first glance, the statement in the prompt does seem to be correct. However, anyone writing academic essays or business papers should be able to spot the grammatical error. In formal writing, a company or business is normally referred to as “it”, not “he”, “she”, or “they”. Therefore, ChatGPT should have suggested changing the word “their” to “its”.

Even though OpenAI’s chatbot is a language model, it still makes obvious language mistakes. Our experiments demonstrate how essential it is to proofread any example essay, summary, or outline written by ChatGPT.
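Letter-count claims like the one about “scintillate” are deterministic, so they are easy to verify without relying on a chatbot. Here is a minimal Python sketch; `count_letter` is our own hypothetical helper name, not part of any tool mentioned in this article, and it simply counts case-insensitive occurrences of one letter in a word.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


if __name__ == "__main__":
    word = "scintillate"
    # Check a few letters, including the "R" that ChatGPT claimed was there.
    for letter in ("r", "t", "l"):
        print(f"{letter!r} appears {count_letter(word, letter)} time(s) in {word!r}")
```

Running it reports 0 occurrences of “r”, confirming that the letter does not appear in the word.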

For Research

Strengths

ChatGPT has excellent features for finding all sorts of information. It can tell you which sights to visit abroad, recommend a dinner recipe, or answer a question for a history essay. It can also write an article or summarize a literary work, which makes it a great aid in research.

Its main functions include:

- Customizing the research to the audience. If you know who will be reading your paper, the chatbot can adjust your content to appeal to your target demographic, taking into account age, gender, hobbies, location, and so on.

- Finding weaknesses in a text. You can enhance your work by asking ChatGPT to review your material. Also, you can ask for additional information to enrich and deepen your research.

- Copying the tone of reputable sources. ChatGPT can rewrite your text so that it looks more professional. You can choose a well-known publication or a ready-made study and ask the AI to replicate its tone and style.

- Analyzing statistical data. It can be challenging to deal with a vast number of surveys and calculation results on your own. For AI, however, it’s a matter of seconds. It can also help interpret complex data sets, draw conclusions, construct graphs, and identify patterns.

- Summarizing information. ChatGPT can quickly summarize even the most complex concepts in a long text. The next time you write a research paper, you can ask it to give an overview of the literature or a technical topic, or to serve as a notes generator.

Below are a couple of examples.

In the first one, we asked the chatbot to generate research questions, and it gave a fairly relevant answer. The second task is summarization.

Weaknesses

ChatGPT’s results are often quite accurate. However, there have been notable cases where the AI has produced incorrect or completely made-up information. There are many such reports on the Internet, from blog posts to articles. We took a closer look at one of them and decided to test it ourselves.

This experiment was conducted by the writer Susanne Dunlap. It aimed to examine how well ChatGPT would do with a specific and narrow topic. Can we trust the facts that the chatbot gives us? The writer chose the history of the Biddeford Opera House in Maine.

The researcher asked ChatGPT to share the history of the opera house in the 19th century. While it gave an extensive answer, some information seemed dubious:

- When did Enrico Caruso perform at the Biddeford Opera House?

- What was the date of the fire at the Biddeford Opera House?

When Susanne Dunlap entered the first prompt, ChatGPT informed her that the famous tenor performed at the opera house once, on October 22, 1909. The researcher then investigated further but couldn’t find any information online to either verify or disprove the chatbot’s claim.

We took it upon ourselves to ask the same question once again.

Our test led to a completely new date: November 5, 1916. Notably, the opera house’s official website has no record of any Enrico Caruso performance at the Biddeford.

Upon further prompting, ChatGPT did not correct its error – it simply doubled down:

Such an answer is even more suspicious, as it still doesn’t match the date ChatGPT gave Susanne Dunlap during her experiment.

Moving on to the second part of the experiment, we decided to ask about the fire at the opera house. When Susanne Dunlap asked ChatGPT, it told her that there had been no fires at the Biddeford. When our research team entered the same prompt, we got this result:

Once again, we got an unexpected result! The chatbot gave us the date February 17, 1932, which again didn’t match the answer Susanne Dunlap received. However, the discrepancy between answers isn’t the only issue here. On the official website dedicated to the history of the Biddeford Opera House, the year of the fire is given as 1894.

Susanne Dunlap’s experiment and our own checks show that ChatGPT cannot be entirely trusted with facts. Therefore, any information received from this program should be carefully double-checked and verified.

For Math

Strengths

First and foremost, ChatGPT is, of course, a language model. This means that it struggles more with numbers than with text. Even though it’s clearly not a calculator, this AI program can still solve simple mathematical and logical problems.

As you can see, ChatGPT has managed to handle an easy task for 5th-grade pupils. This is unsurprising: as a language model, it handles word problems relatively well. But what happens if you give it a multiplication exercise?

The chatbot successfully solved an easy task for the 3rd grade and described each calculation step. Such capabilities make it a good tutor for explaining simpler exercises.

Weaknesses

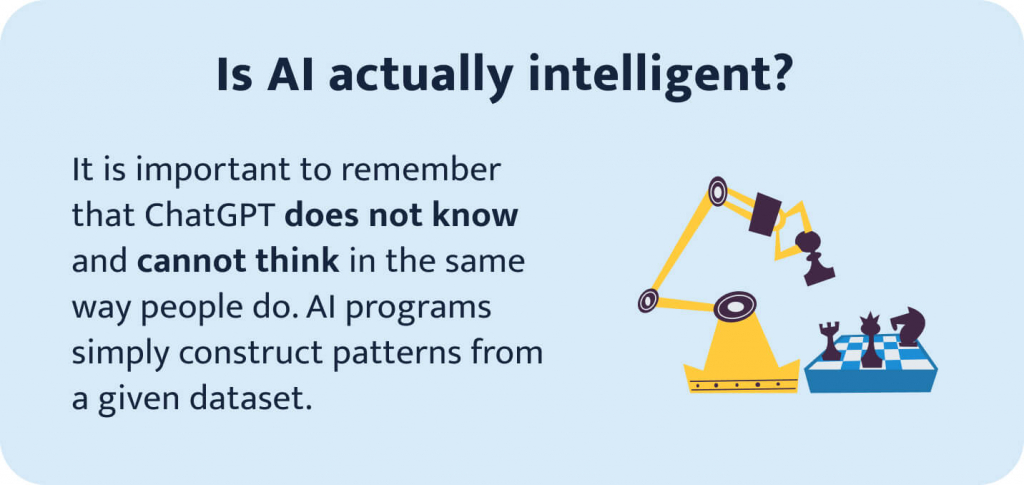

And yet, math is probably ChatGPT’s weakest area, and its math abilities may be getting worse, especially when it comes to higher mathematics.

To investigate this issue, researchers studied how ChatGPT’s capabilities changed over time. They observed significant variations in its performance when identifying prime numbers. GPT-4, the latest model at the time, initially excelled, correctly identifying prime numbers 97.6% of the time in March 2023. Three months later, however, its accuracy had dropped sharply to 2.4%. The investigators attributed this unstable performance to the fact that ChatGPT was exposed to a large and diverse data set during training, which could have led it to learn inaccuracies over time.

Some researchers have focused on creating natural language processing algorithms to solve simple math word problems. These require multistep inference, such as translating words into equations. When researchers tested whether ChatGPT could handle such processes, they concluded that it could not, due to its limitations as an LLM. Put simply, OpenAI’s chatbot was not created for mathematical calculations.
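Prime-number questions like those in the study have definite right answers, so a chatbot’s responses can be checked with a few lines of code. Below is a minimal Python sketch using trial division, which is simple and fast enough for numbers of a few digits. The `is_prime` helper and the sample “chatbot answers” are our own illustrative assumptions, not the researchers’ evaluation setup.

```python
import math


def is_prime(n: int) -> bool:
    """Return True if n is prime, using trial division up to sqrt(n)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Only odd divisors up to the integer square root need to be checked.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical yes/no answers a chatbot might give (made-up examples).
    claimed = {17077: True, 221: True, 97: True}
    for n, chatbot_says_prime in claimed.items():
        actual = is_prime(n)
        status = "correct" if actual == chatbot_says_prime else "WRONG"
        print(f"{n}: chatbot said prime={chatbot_says_prime}, actual={actual} -> {status}")
```

Trial division is chosen for readability rather than speed; for very large numbers, a probabilistic test such as Miller-Rabin would be the usual choice.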

For Coding

Strengths

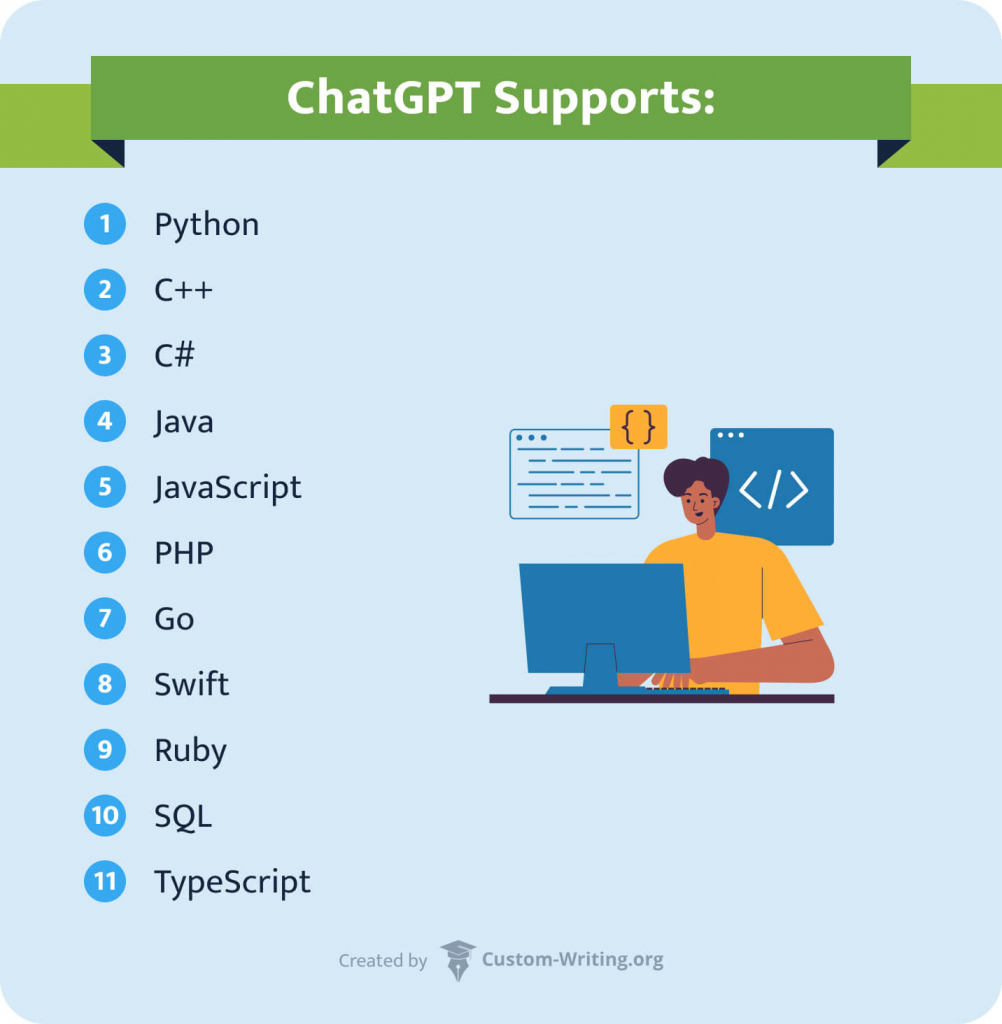

ChatGPT has proven itself quite helpful in the field of coding. This program has already helped many programmers fix, finish, and improve their code.

Coders often turn to the chatbot for everyday coding tasks. Some have reported positive experiences generating algorithms or machine learning models. This is possible because ChatGPT was trained on a massive body of text that also contained code snippets in various programming languages.

All you have to do is write a concise, clear prompt for the program to follow. Your instructions directly determine the result, including how much of the LLM-generated code you will have to finish yourself. Such functionality can significantly improve your productivity.

Weaknesses

ChatGPT may be a phenomenal assistant, but will it replace human developers? Our answer is clear: at this stage, the LLM cannot work at the same level as a person. Generating code is only half of what makes a successful developer, and ChatGPT still lacks the creativity and context awareness to be truly competent.

Let’s take a closer look at its shortcomings:

- Understanding the context. Every project has its own goals, constraints, and requirements. The coder must also consider the specifics of the design and think about the bigger picture. An AI program is less able to connect different parts of a project without breaking something along the way.

- Database limitations. It’s no secret that ChatGPT doesn’t have access to the Internet, which means it can’t keep up with the times. Its training data doesn’t cover every language and framework, so in specialized fields, the chatbot may be unable to help.

- Lack of logic. Even though the AI aims to give perfect answers, it can still make logical errors and inaccuracies. Therefore, every piece of generated code needs careful verification.

- Cybersecurity and privacy issues. OpenAI may store your requests and use them as training data. This means that sensitive details you share, such as proprietary code, could be exposed, which could have serious implications for the security of your code.
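A simple way to verify generated code is to compare it with a trusted reference on a handful of test cases before using it. The minimal Python sketch below assumes that ChatGPT produced a hypothetical `median` function and checks it against the standard library’s `statistics.median`; both the function and the test cases are illustrative, not output from our experiments.

```python
import statistics


# Hypothetical example of chatbot-generated code that we want to verify.
def median(values: list[float]) -> float:
    """Return the median of a non-empty list of numbers."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def check_against_reference(func, cases):
    """Compare func with statistics.median; return (case, expected, got) for mismatches."""
    failures = []
    for case in cases:
        expected = statistics.median(case)
        got = func(case)
        if got != expected:
            failures.append((case, expected, got))
    return failures


if __name__ == "__main__":
    test_cases = [[3, 1, 2], [4, 1, 3, 2], [5], [-1, -5, 10, 0]]
    problems = check_against_reference(median, test_cases)
    print("all tests passed" if not problems else problems)
```

An empty failure list only means the function agreed with the reference on these cases; it does not prove the code is correct for every input, so edge cases such as an empty list still deserve attention.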

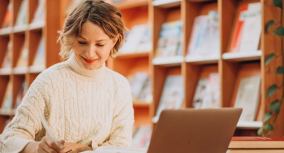

🔎 Why Does ChatGPT Have Accuracy Issues?

Despite the fact that we call ChatGPT “artificial intelligence”, this program can’t actually think. The chatbot generates its responses from patterns learned from its training data. The developers are working hard to improve its quality, but many factors still influence the accuracy of ChatGPT:

- Data used for training. Unfortunately, ChatGPT cannot check its training data for quality and truthfulness. So, when you get an answer to your question, you cannot be sure that it is based on reliable sources. You will have to double-check the information yourself. This is especially true for specialized fields and narrow topics.

- Clarity of the prompt. The clarity of your prompt’s wording directly affects the quality of the outcome. You’ll get an ambiguous or incorrect answer if you leave out context or ask an unclear question. Try rewording or paraphrasing your prompts to get better results.

- Specific vocabulary. ChatGPT can understand some specialized terminology, but excessive use of it can be problematic. Heavy use of technical terms or jargon may make it harder for the program to understand your prompt.

- Bias. The LLM has no opinions, judgements, or understanding of morality. It does not think; its responses reflect its training data. As a result, ChatGPT can reproduce various types of prejudice and bias found in the diverse opinions on the web.

- Generative nature. AI-generated content can be produced quickly and easily. However, its quality is often questionable. Since the chatbot relies entirely on your short prompt, vague instructions may lead to meaningless results. Therefore, the more details you provide, the more relevant the answer will be.

- Limited access to information. ChatGPT is not the best research assistant. Its training data extends only to January 2022, so it doesn’t know about studies published after that. Moreover, it cannot tell you which sources it used to generate a response.

- Users’ engagement. OpenAI invites users to give feedback on ChatGPT’s responses. However, this feedback does not always improve the situation, as it can be subjective. As a consequence, it can even degrade the quality of an already inaccurate response.

Can ChatGPT Correct Wrong Answers?

As was stated above, ChatGPT’s developers are constantly working to upgrade this program. With each new version, the quality of the chatbot changes, and not always for the better. In some areas, the program becomes more accurate, and in others, on the contrary, it works worse than before. But can ChatGPT correct its mistakes if you point them out?

We asked ChatGPT a question that most people could answer without difficulty. However, the AI made several glaring mistakes:

When we pointed out the mistakes, the chatbot agreed with our remark and gave us a new output. This answer looks much better; however, there is still an error, as the word “illness” doesn’t fit the initial prompt. From this example, we can conclude that ChatGPT is capable of correcting its errors, yet it remains imperfect.

📊 How Accurate Is ChatGPT Compared to Other Chatbots?

As our research has shown, ChatGPT is not the most reliable tool. It often makes mistakes in its outputs and cannot provide a list of sources. But how does it perform compared to other popular chatbots?

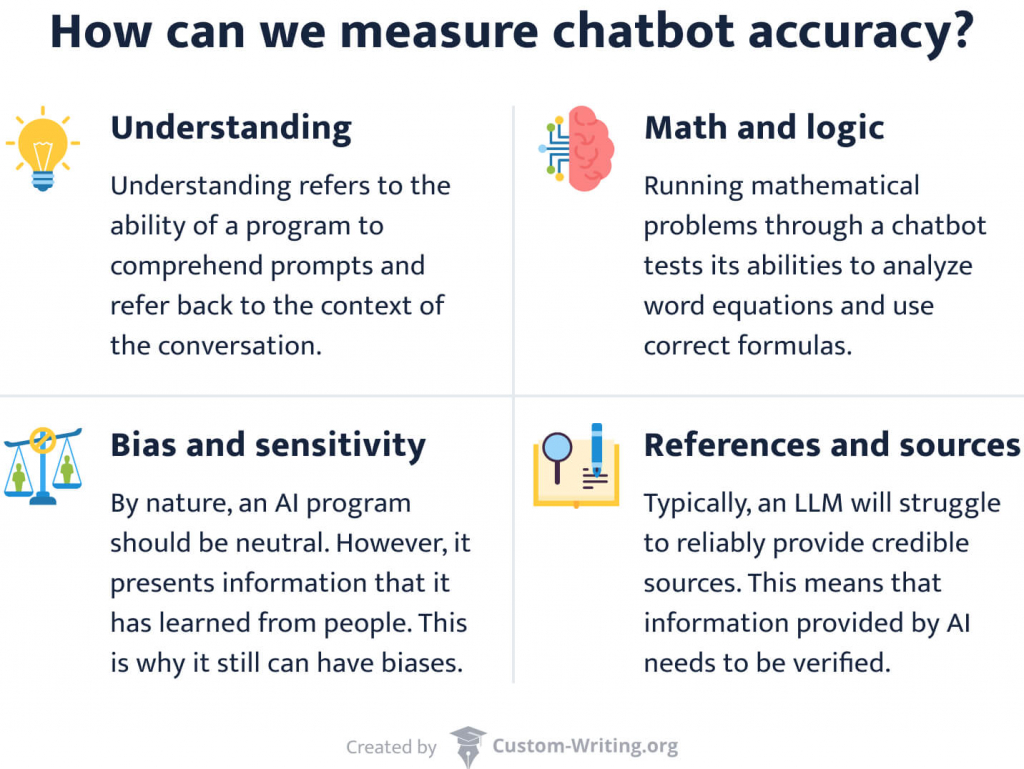

- Understanding

ChatGPT. ChatGPT performs best in terms of comprehension. It can handle long queries with detailed instructions while giving equally lengthy responses. It also draws on the context of your conversation and takes previously provided instructions and details into account.

Other chatbots. Many other chatbots struggle with multitasking and highly detailed prompts. For example, HuggingChat doesn’t take context and previous queries into account; it often gets confused and gives incoherent answers. Bing Chat can handle long instructions, but its answers tend to be brief, as it conserves processing power.

- Math and Logic

ChatGPT. These are perhaps some of ChatGPT’s biggest challenges, as it struggles with medium- to high-complexity tasks. This is due to the peculiarities of language models. Even after its 2023 updates, it still has trouble solving math problems.

Other chatbots. You can test Bing and Google Bard on math and logic problems. Unlike ChatGPT, they are connected to the internet and can use search engines to find the correct answer. As a result, they tend to perform better in these areas than OpenAI’s chatbot.

- Bias and Sensitivity

ChatGPT. In this respect, ChatGPT is probably one of the most flexible chatbots. It will answer provocative questions, although the newer model avoids giving opinions. With the right prompt, it can even be made to produce potentially harmful content.

Other chatbots. Bing Chat indiscriminately rejects potentially inappropriate requests that are gendered or political in nature. This cautious approach likely reflects Microsoft’s experience with its Tay chatbot, which was taken offline in 2016 after posting racist and offensive messages.

- References and Sources

ChatGPT. ChatGPT doesn’t give reliable references for its responses, which makes its data challenging to work with. It is also prone to so-called “hallucinations” when it lacks information on a subject, a consequence of gaps in its training data.

Other chatbots. If you use Bing Chat for research, it provides data with little interpretation, but it includes links to its sources. You can also try Bard, which can give up-to-date, detailed answers thanks to internet access but does not provide a reference list.

📚 A Review of ChatGPT’s Features

ChatGPT is a chatbot built on a large language model (LLM) created by OpenAI. It has been trained on a massive amount of text from the internet, extending up to January 2022. This allows the chatbot to communicate with users in a human-like manner. As this article shows, ChatGPT has its own pros and cons, but it can take on almost any task, from a simple conversation to writing functional code.

Let’s summarize its capabilities:

- Answering questions.

The ability to understand and generate content allows the chatbot to hold a conversation with its user. It can explain complex concepts in a simple and accessible format, which opens up opportunities for individualized tutoring.

- Translating text.

Trained on over 570 GB of text data, the chatbot’s language model has a vast vocabulary in many different languages. This means you could try using it as a translator.

- Fixing and writing code.

ChatGPT can write code in many programming languages, such as Python and Java. It can also help find and correct errors in other people’s code.

- Writing an academic paper.

Drawing on the essays, scientific papers, and articles in its training data, ChatGPT can create new texts that you can use for inspiration and in your research. It can even serve as a poem analyzer!

- Solving math problems.

You can work through math problems step by step and ask the chatbot to explain them as clearly as possible. However, it still struggles with numbers, especially in geometry and higher math.

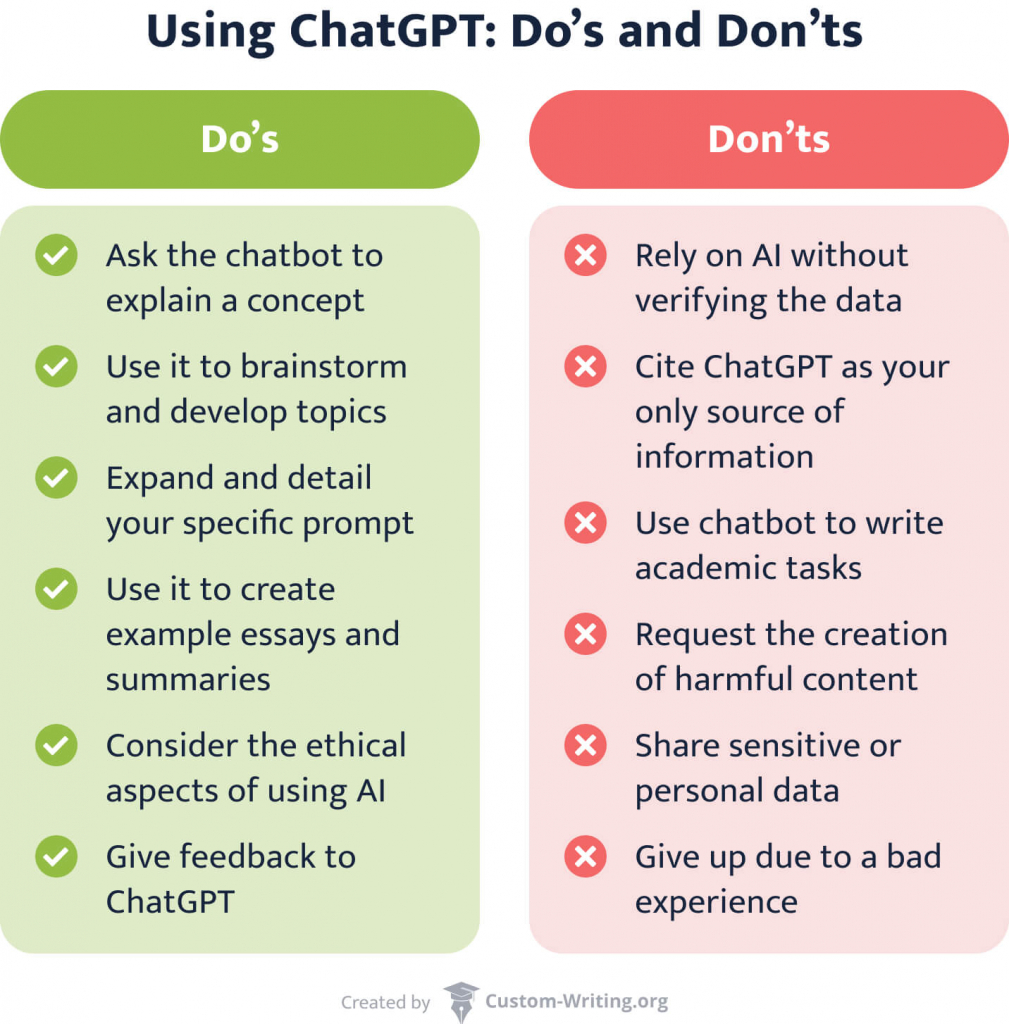

🤖 Artificial Intelligence: Do’s and Don’ts

Although AI programs often have accuracy issues, they are still valuable tools for studying and working. The key is to use them correctly while protecting your privacy and keeping the ethical aspects of AI in mind. ChatGPT is a versatile tool that can be used effectively in a variety of scenarios. For homework, you can ask the chatbot to:

- Explain complex rules or concepts in simple language.

- Generate new ideas for projects and discussions.

- Solve various math problems.

Experiment with your prompts, and don’t give up if you fail to get it right the first time! Also, don’t forget to check your academic papers with our AI text finder or another handy detector before submitting them.

While ChatGPT offers valuable help, students should not rely solely on its answers without checking the data and facts provided.

These recommendations may help you:

- Compare the information you receive from ChatGPT with credible sources.

- Cite ChatGPT properly and don’t use the chatbot as your only source of data.

- Avoid using the generated essays as a finished paper; consider them as an example.

We hope this article has helped you better understand where you can rely on ChatGPT and where you should be careful. If you are interested in learning more about the limits of this program, read about them here!

Check out other excellent materials about ChatGPT and similar AI tools:

- How to Use ChatGPT to Make a PowerPoint Presentation

- ChatGPT and College Essays

- Can I Get Caught Using ChatGPT?

- Is Using ChatGPT Cheating?

- 17 Best AI Tools for Students in 2026

- How Do AI Detectors Work?

- 17 Best AI Tools for Homework in 2026

🔗 References

- ChatGPT – OpenAI

- Chat GPT: What is it? – University of Central Arkansas

- ChatGPT: Everything you need to know about OpenAI’s GPT-4 tool – Alex Hughes, Science Focus, BBC

- GPT-4: Is the AI behind ChatGPT getting worse? – Jeremy Hsu, New Scientist

- Is ChatGPT Getting Worse? An In-Depth Analysis of Its Changing Performance – Daniel Crompton, LinkedIn

- What Kind of Mind Does ChatGPT Have? – Cal Newport, The New Yorker

- How Accurate Is ChatGPT In Providing Information Or Answers? – Botpress

- ChatGPT vs. Bing vs. Google Bard: Which AI Is the Most Helpful? – Imad Khan, CNET

- Thinking about ChatGPT? – The University of British Columbia

- ChatGPT: Academic Integrity – Sarah Lawrence College

- How you should—and shouldn’t—use ChatGPT as a student – Open Universities Australia