ChatGPT and other AI applications are gaining momentum as helpful tools in education, research, and business. At the same time, the need to understand the full spectrum of AI ethics is becoming more pressing.

Here’s a recent example:

Lensa AI was trained on a vast database of real people’s photos and the creative works of artists from around the world, collected without their consent. Many critics see this as a direct violation of copyright, privacy, and intellectual property rights. Like Lensa, all AI apps are trained on real-world data, and the boundary between ethical and unethical uses of artificial intelligence is blurred, which raises serious concerns.

In this article, our best custom writing experts will cover the most pressing ethical issues related to ChatGPT and AI in academia. We will focus on privacy and bias concerns and discuss the aspects of academic integrity in the context of AI usage.

😱 The Most Pressing Ethical Issues of ChatGPT & AI

Artificial intelligence is a rapidly evolving field surrounded by controversy. Opinions about AI’s potential benefits and threats vary greatly among the general public and the leaders of technological progress.

For instance, Bill Gates is optimistic about AI advancements, believing AI will free up people’s time and improve our quality of life. At the same time, Elon Musk sees artificial intelligence as the most significant risk to the future of human civilization. His views align with those of Stephen Hawking, who suggested during his lifetime that AI could threaten humanity’s existence.

Even if we accept that Musk’s and Hawking’s evaluations are too pessimistic, it’s impossible to deny that AI regulations and laws lag far behind the pace of technological advancement in this area.

The most pressing ethical issues related to AI use include:

- AI-related privacy concerns,

- Biased algorithms,

- Academic integrity issues,

- The problem of copyright.

Let’s examine them in more detail.

AI & Privacy

Machine learning algorithms are trained on texts like research articles, social media posts, and even customer information from online stores. This is often done without the consent of the data owners, which is unethical and can even be dangerous, since the information could be used for surveillance or identity theft.

Since AI is widely used in marketing to personalize customer experience, there’s a risk of exposing too much data to AI during your regular online activities.

AI & Bias

AI algorithms are not trained to present data objectively. They learn from large datasets that may contain biased material, and they tend to reproduce these biases in their output.

With so much online data featuring prejudiced representations of disadvantaged groups (e.g., African Americans, women, and members of the LGBTQ+ community), ChatGPT’s output can be similarly biased. Those who mindlessly rely on AI may end up perpetuating stereotypes about underrepresented and marginalized populations.

AI & Academic Integrity

The most pressing issue with using ChatGPT for academic content is that its output can’t be called plagiarism in the classical sense of the word, since you’re not directly stealing from a specific author. It’s also impossible to detect AI-generated texts with 100% certainty. Skillfully edited ChatGPT content may be passed off as original work, which is unacceptable and unethical.

The scale of academic dishonesty is alarming: a quick Google search for standard ChatGPT phrases like “as an AI language model…” turns up many AI-generated articles published in reputable scholarly publications. This suggests that even some “peer-reviewed” journals publish mindlessly generated content that no one bothered to proofread.

AI & Copyright Issues

The problem of academic integrity is directly related to the issue of copyright in the age of AI. In the case of Lensa, artworks used to train the algorithm weren’t given proper credit. Artists weren’t asked for explicit consent to use their products for AI training. Neither were they paid for the use of their works.

If a regular website had used the artworks this way, existing copyright rules would have addressed the issue adequately. With generative AI tools like Lensa and ChatGPT, things are very different. The ethical ways of getting data for machine learning are still on the AI regulators’ agenda, with no evident solutions in sight.

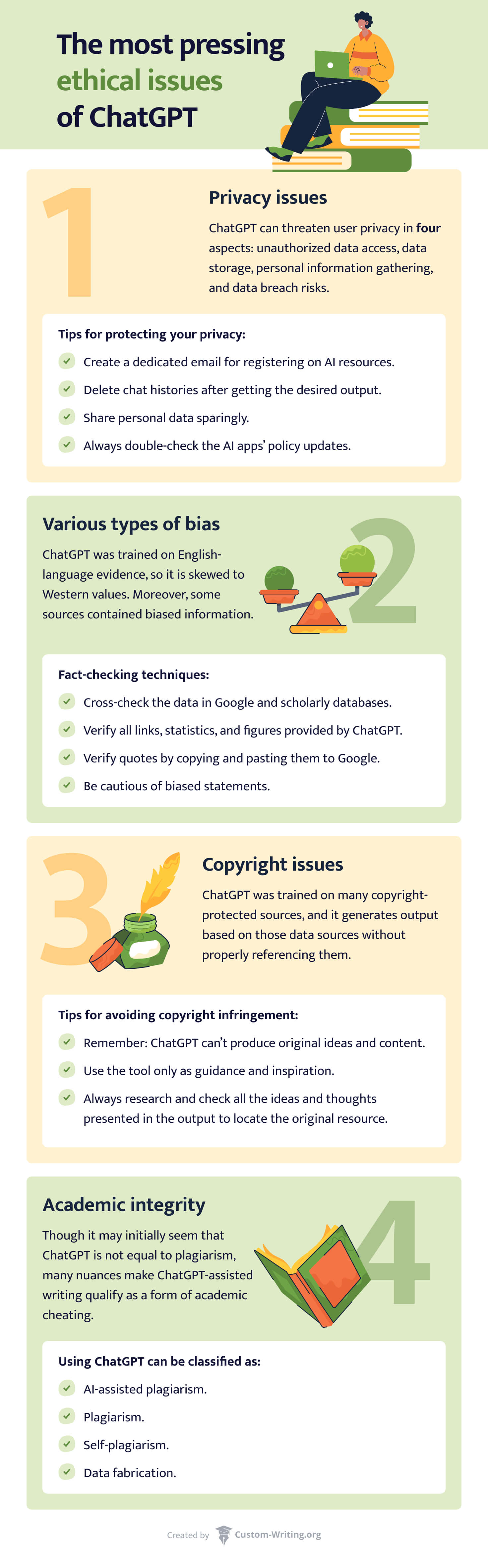

We will discuss all of these issues in more detail in the following sections. The short yet informative infographic above summarizes the tips we share below.

🕵️ ChatGPT: Privacy Concerns

When it comes to how ChatGPT can threaten user privacy, there are four aspects to keep in mind:

- Unauthorized data access. ChatGPT’s training data was gathered by crawling the web and collecting information from online resources without express permission. As a result, the chatbot’s training data is likely to contain sensitive information that people never consented to share with it.

- Data storage. Whenever you share information with the chatbot as input, it is stored and may be used for future training. While general inquiries are pretty harmless, more advanced AI usage can pose serious privacy threats. For example, a doctor who uses ChatGPT to write patient notes or an accountant who uses it to compile a tax report puts client data in danger.

- Information gathering. All users of ChatGPT should also know that the program collects data about their IP addresses, browser types, and preferences. Even though this data may not identify you directly, it may still be used in ways you would disagree with.

- Data breach risks. Like other digital resources, ChatGPT is constantly threatened with hacks and breaches. There is always a risk of having your data leaked from AI programs to criminals.

Not everyone thinks about these aspects when feeding their personal details into the algorithm or sharing their device information. But is there a way to avoid potential privacy breaches? Keep reading to learn the answer!

Tips for Protecting Your Privacy

Luckily, there are plenty of practical measures that can minimize your exposure to AI tools’ privacy risks. These digital literacy skills will come in handy whenever you interact with information technology:

- Improve your anonymity in AI-based apps. One way to do it is to create a dedicated email for registering on such resources. The email shouldn’t be linked to your business, financial, or social activities.

- Delete chat history after getting the desired output. Chats are saved automatically, but you can easily remove the conversation after you’re done with it by clicking “Delete chat”.

- Share personal data sparingly. Avoid giving ChatGPT more information than it needs to generate a response. If you want to proofread and edit a sensitive document or an original, unpublished manuscript, it’s best to do it yourself instead of entrusting the task to AI. To make the process easier, you can use our handy Read My Paper tool that doesn’t save the pasted text.

- Always double-check policy updates. Read the data management policies of AI-based apps and ensure you understand how they store your data. If there’s anything fishy about them, it’s best to switch to other apps.

⚖️ ChatGPT and Various Types of Bias

ChatGPT is inherently prone to bias, as OpenAI’s Help Center recognizes. There are several reasons for that:

- The algorithm was trained predominantly on English-language material, so it is skewed toward Western perspectives and values.

- The chatbot was trained on a vast mass of available data that isn’t necessarily objective. Many of its sources contained biased information or presented certain subjects in a discriminatory manner.

- AI can’t reliably judge whether its output is objective or whether a biased response might cause harm.

Let’s now examine the most prominent examples of biases found in ChatGPT. We will also discuss how you can ensure objective results.

Gender Bias Example

Women became substantially represented in typically male-dominated fields only a few decades ago, and the chatbot was partially trained on texts written before that time. Consequently, it has absorbed many discriminatory and prejudicial judgments about gender. This is why ChatGPT may give sexist responses to questions such as “What is the gender of a nurse?” (“female”) and “What positive qualities do women employees possess?” (“grace and beauty”). Research also shows that GPT models associate men with greater competence and higher levels of education.

Political Bias Example

Researchers from the Manhattan Institute have noticed ChatGPT’s skew toward liberal Western values. Their study revealed that ChatGPT’s output prioritized left-leaning views and that conservative content was more likely to be censored.

The AI also reproduced long-held biases about middle- and lower-class individuals with certain demographic characteristics. These observations led the researchers to conclude that ChatGPT reflects the socioeconomic biases characteristic of the political left.

Fact-Checking Techniques

There is a widely used term for artificial intelligence making things up: a hallucination. AI developers are still racking their brains over how to stop it from happening, but the fact remains:

Language models can produce erroneous content with fake names, dates, and cases that never existed. That’s why it’s your responsibility to conduct thorough fact-checking whenever you plan to use AI-generated content in your writing.

Here are some techniques to help you verify the accuracy and objectivity of generated content:

- Cross-check the information against Google search results, government publications, and scholarly databases.

- Verify all references provided by ChatGPT. See whether they relate to actual sources.

- Check all dates and names from the chatbot’s output to ensure they are correct.

- Verify quotes by pasting them into Google search in quotation marks. This will show you exact matches along with the source of the quotation (if it exists).

- Double-check any statistics or figures ChatGPT mentions in its reply. They are often incorrect, so it’s better to look them up online.

- Be cautious of biased statements. We recommend using multiple sources to get an objective view of the issue.

📚 ChatGPT: Copyright Issues

The issues ChatGPT creates in terms of intellectual property are complex and controversial. There are at least two reasons for that:

- The chatbot was trained on many copyright-protected sources. It constantly generates output based on such data sources without adequately referencing them.

- Ironically, it’s the user who may be held liable for IP infringement if they use copyright-protected output in their texts.

Unfortunately, any AI-generated content may potentially infringe copyright. Since ChatGPT doesn’t accurately attribute its output to the sources it drew on, the user is always at risk of facing plagiarism accusations.

Tips for Avoiding Copyright Infringement

The issue of AI plagiarism is very serious, but there is a way to avoid getting into trouble. Remember the following whenever you work with generated content:

- ChatGPT is unable to produce original ideas. It can only rehash what has already been published.

- All facts and ideas in the chatbot’s output should be additionally researched and fact-checked. Ideally, locate the source from which they were initially taken.

- It’s vital to treat AI-generated content responsibly and ethically, using it only as guidance and inspiration and not as publication-ready material.

🎓 ChatGPT and Academic Integrity

Academic integrity is the cornerstone of the global research community. But if you look at the scope of ChatGPT-generated publications in peer-reviewed journals, you can see how much unethical AI users undermine the principles of integrity.

If professors and researchers resort to a chatbot as a source of academic content, what can we possibly expect from students? Many of them use AI to cheat without even realizing it. But fortunately, there is a way to use ChatGPT without violating any rules.

Keep reading to learn how to deal with AI tools ethically and responsibly.

How Can ChatGPT Be Used to Cheat?

The major problem with students’ use of AI is the false belief that it can substitute for independent intellectual labor. Many of them mistakenly rely on ChatGPT and other AI-based tools, like a ChatGPT essay writer, as a free and quick essay-making machine. Instead of getting high grades, such students may face severe educational repercussions and penalties.

It may seem that using the chatbot’s response in your paper is not equal to plagiarism. However, since language models produce their outputs based on existing texts without any effort from the user, their application in writing is regarded as a form of academic cheating.

There are several ways in which it can occur:

- AI-assisted plagiarism happens when students submit a generated text and say it’s their own writing.

- Plagiarism occurs whenever you ask ChatGPT to rephrase an existing piece and pass it off as your intellectual product.

- Self-plagiarism happens when you use ChatGPT to rewrite your past papers in different words and submit them repeatedly.

- Data fabrication involves making up scientific evidence with the help of ChatGPT and presenting it as part of your primary research.

The Risks of Academic Cheating with ChatGPT

The consequences of being caught cheating with ChatGPT differ among educational institutions. Students may face probation, fail a class, or receive grade penalties. But the most significant risk of academic cheating is getting expelled from a college or university.

It’s not an exaggeration: most schools have very strict policies regarding plagiarism of any kind, AI included. That’s why students who use generated content unethically risk being expelled from their academic institution with a badly stained reputation.

In addition to the educational and reputational damages, misuse of ChatGPT’s functions causes harm to the cheater and even entire communities:

- If you rely too much on AI, you deprive yourself of valuable learning opportunities.

- Receiving ready-made answers causes your critical thinking skills to decline.

- Taking ChatGPT’s outputs at face value without proper fact-checking can damage the academic field and may even pose a danger to people’s well-being.

As you can see, taking the risk is just not worth it. The only benefit you will achieve with ChatGPT-assisted cheating is having a little more free time, although you will likely lose much more time dealing with the consequences. In short, don’t try it.

👍 Tips for Using ChatGPT Responsibly

The good news is that not all uses of ChatGPT are as dangerous and unethical as some alarmists claim. In an average student’s reality, AI is a helpful study buddy and nothing more. It can assist with various aspects of homework, help extract key information, aid in finding a research direction, and perform many other functions without causing any trouble.

The key to responsible AI use is to understand the difference between what’s ethical and what’s unethical. Treating the chatbot’s outputs as guidance and inspiration for your own work is entirely acceptable and even beneficial. For example, it’s okay to use a tool like a quote meaning generator to understand a quotation better, but copying its output into your essay is not.

Want to know more? Keep reading to learn actionable tips for using AI responsibly.

Follow Your Institution’s Policy

All students of a specific school are bound by its academic conduct and integrity code. This policy typically lays out the principles of ethical conduct, including a complete ban on plagiarism.

After ChatGPT was released, many schools revised their policies to cover AI usage. This aspect of education is still relatively new, and different institutions hold varying views on the ethics of ChatGPT in education. Some count it as plain plagiarism, others view submitting generated work as falsification, and still others see it as “unauthorized assistance”. Some schools require running each submitted paper through an AI essay checker. The consequences of cheating also differ, with some colleges being more lenient than others.

To avoid breaking any rules, study your school’s policy and make sure you understand it. It’s also a good idea to talk to your professors about their stance on AI use. For example, some may punish students for using ChatGPT simply because an AI detector erroneously flagged their text as AI-generated, while others would conduct a more thorough examination and give the accused another chance.

Acknowledge Your Use of AI

Did you know that acknowledging ChatGPT usage outright can save you from a lot of trouble? Although AI doesn’t count as a legitimate source of information, you can mention its assistance at particular stages of work.

Honesty is a key component of academic integrity. Being open about the ethical use of AI tools is good for your reputation, and it could also save you from trouble in case you get falsely accused of cheating.

There has yet to be a uniformly accepted rule for citing ChatGPT as a writing aid, but some educational institutions are already developing their guidelines. For example, one option is to write a sentence like this in the Methods or Acknowledgements section:

Example:

ChatGPT was used to assist in the formulation of this study’s research question.

More specific guidelines will likely follow as the usage of chatbots gets more regulated.

Conduct Fact-Checking

Even if AI-generated output looks plausible, it may be made up. For example, the algorithm can stitch together the names of several authors, invent a book title, add a generic description, and present it to you as a legitimate source. It also has a habit of presenting non-existent quotes and statistics without marking them as such. Even generated summaries can contain erroneous information.

It goes without saying that fact-checking should accompany every step of your work with ChatGPT. Always do a quick Google search for the names, facts, and other information the chatbot presents, even when you use it to summarize a text. This way, you will ensure your data is correct and precise.

💡 Pro tip:

If you need to quickly extract the main points from an academic article or any other source, try using tools like a key points generator or a textbook to notes converter. They can summarize any text in seconds, but it’s still worth comparing the summary with the original to make sure nothing important was lost or distorted.

Use AI to Get Inspired

Like any other source of assistance, ChatGPT is acceptable to use as long as you don’t ask it to compose whole essays or other academic papers for you and don’t copy and paste generated content into your texts. It’s okay to use it in many other ways, such as:

- To create a sample research question,

- To narrow down the focus of your study,

- To get inspired by essay examples,

- To develop slides for a PowerPoint presentation,

- To single out interesting arguments for discussion.

In other words, using ChatGPT for inspiration is completely normal if you understand its limitations and don’t cross the line of ethics.

💡 Pro tip:

We also recommend using specialized AI tools for homework to boost your creativity and improve your studies.

Here are some of the best ones:

- An introduction maker will help you create an excellent opening paragraph for your paper.

- If you need to write an inspired speech, try our speech writer generator.

- Hook your readers from the start with our customizable attention grabber generator.

- A transition sentence generator can help instantly improve the flow of any text, from academic papers to blog posts.

✅ Key Takeaways

As you can see, ChatGPT is a versatile tool that can be used either ethically or unethically. It can seriously stain your academic reputation if used carelessly and without proper acknowledgment. At the same time, its numerous features and functions can be beneficial for any student as long as they’re used responsibly.

Thank you for reading!

We hope our article helped you learn how to use ChatGPT effectively without getting into trouble. If so, feel free to share it with your friends or leave us a comment below. And if you want to improve your academic experience even further, check out more of our articles!


🔗 References

- The Ethics of College Students Using ChatGPT: The University of North Carolina at Chapel Hill

- Generative AI Ethics: 8 Biggest Concerns and Risks: TechTarget

- Chatting and Cheating: Ensuring Academic Integrity in the Era of ChatGPT: Taylor & Francis Online

- Combating Academic Dishonesty, Part 6: ChatGPT, AI, and Academic Integrity: University of Chicago

- The Ethics of ChatGPT — Exploring the Ethical Issues of an Emerging Technology: ScienceDirect

- AI Ethicist Views on ChatGPT: Forbes

- Embracing Creativity: How AI Can Enhance the Creative Process: New York University

- Copyright Chaos: Legal Implications of Generative AI: Bloomberg Law

- How Is ChatGPT Biased? Researchers Identify a Variety of Concerns: Interesting Engineering

- ChatGPT and privacy: What happens to your personal data?

- What Are AI Hallucinations?: IBM